Biden vs Facebook vs Anti Vaxxers

While Biden and Facebook traded claims about false vaccine information, we found that anti-vaxxers are working to evade the platform's policies, which is why we keep seeing their conspiracy theories.

The US president says Facebook must do more. Facebook says it is removing millions of false claims from its platform. We decided to run a test: how long would it take the average Facebook user looking for facts about Covid-19 to come across anti-vax conspiracy content? The answer: about 20 seconds. The reason could very well be that while governments and big corporations are trying to figure out what to do, anti-vaxxers are already figuring out how to evade the watchmen, and sharing their insights with others.

In his first Valent newsletter, Yale Jackson graduate Juan Carlos examines how much impact efforts to address false vaccine information online are having, and uncovers the tactics anti-vaxxers are using to evade censure - Amil

COVID disinformation on Facebook remains disturbingly easy to access

Last Friday, U.S. President Joe Biden said misinformation about COVID on Facebook was “killing people”. The tech giant pushed back, citing its efforts to combat misinformation about the vaccine and claiming to have removed 18 million instances of misinformation and ‘fact checked’ or suppressed the visibility of 167 million more.

But how much disruption is enough? It depends, in the end, on the value we put on being vaccinated: if we judge, as many governments do, that this is a basic public duty upon which our collective health depends, and that misinformation is likely to persuade some not to get vaccinated, then the bar is high.

So it is a concern that finding this type of content on Facebook remains disturbingly easy. It took us less than a minute to land on groups and pages that question vaccine safety, minimise the seriousness of the pandemic, share conspiracy theories, and even trade tips and tricks on how to evade Facebook’s attempts to clamp down on such content.

As part of our work with Challenging Pseudoscience at the Royal Institution, we have been looking at 20 UK-focused groups and pages that have been routinely sharing false information.

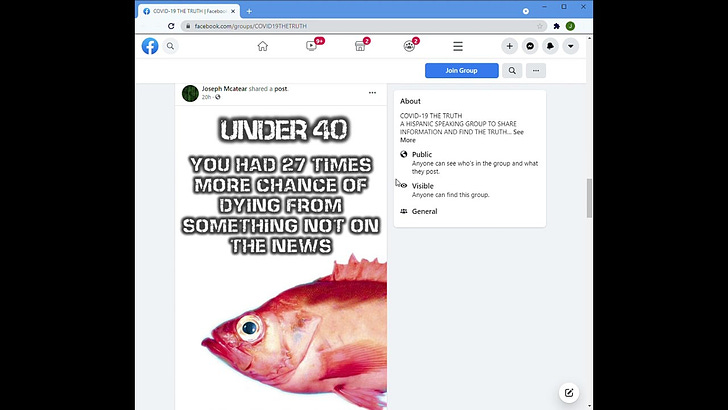

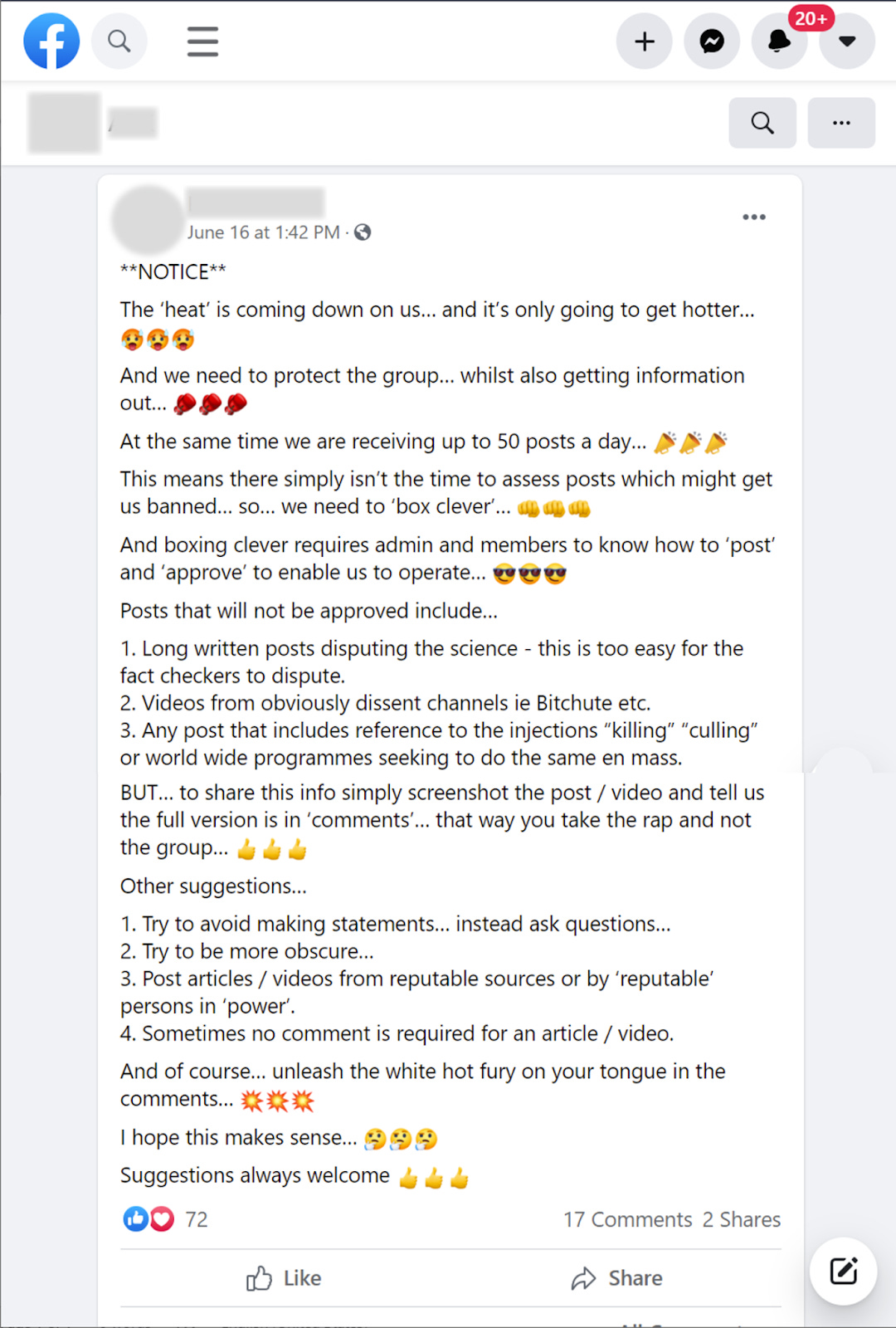

These groups are aware of Facebook’s efforts: we found posts in which administrators reveal methods to avoid fact-checking. In Figure 1, group members are encouraged to use the word “maxine” instead of “vaccine” to stop the algorithm from triggering automatic reviews. In the post shown in Figure 2, the group admin requests that the most lurid conspiracy content be mentioned only in the comments section instead of in ‘main’ posts. This is a response to a recent policy that temporarily requires admins to approve posts when group members have repeatedly violated the Community Standards (for instance, by sharing misinformation). By confining misinformation to the comments section, admins escape accountability. Users are also encouraged to present information that is more difficult to fact-check, and to pose statements as questions, to evade fact-checking altogether.

Figure 1

We don’t know whether vaccine misinformation is so easy to find because Facebook policies are being subverted, aren’t being applied thoroughly, or for some other reason. Facebook appears, by implication, to be particularly focused on preventing accidental exposure to anti-vaccination theories and more sanguine about those who deliberately seek them out being able to find them. It is hard for researchers external to Facebook to know what data it is using to make these determinations. Facebook has a huge data advantage in this debate, greater even than the might of the US Government, which is currently accusing it. The tools Facebook provides to researchers like us, such as CrowdTangle, which enables us to track content across Facebook, are reportedly in danger of extinction. While we know that content moderation works through a combination of algorithmic and human-led efforts (as explained here), we have limited visibility of how Facebook applies this in practice. This is to some degree understandable, because revealing too many details of its strategies to detect misinformation might enable misinformation spreaders to further circumvent them. However, this lack of transparency also prevents independent evaluation of its efforts and leads to the extraordinary spectacle of the US President accusing one of America’s largest companies of complicity in killing people.

Figure 2

At the heart of all of these debates is the question of how much Facebook and other social media content actually influences people’s behaviour. We find clearly inauthentic networks with political objectives reaching millions of people, but it is hard to know what real-world impact this has. People are not, as the FT’s Janan Ganesh put it this week, “passive victims of demonic possession” from what they see online. But the ubiquity, frequency, and social reinforcement effects of what we all see online, on Facebook pages, in groups, via WhatsApp and so on, have revolutionised how we receive information about the world. We are a long way from understanding what that revolution might yet mean in terms of how we act. The stakes have been very high during this pandemic. President Biden may be right about Facebook’s complicity: only Facebook has the data to know. We will be looking at the spread of pseudoscience, and in particular at the psychological drivers behind the spread of misinformation, and we will be sharing our findings.

Juan Carlos Salamanca is a lawyer from Mexico City. He graduated from Yale in 2021 with a Master's in Public Policy in Global Affairs. He focused his studies on tech policy, democracy, free speech, and disinformation. His previous work revolved around personal data protection, ICT law, and international trade and foreign investment.